Automated Kafka Ops with the Kafka Topology Builder and GitOps


One of the common questions when teams start working with technologies like Apache Kafka is how to automate and simplify common operations tasks. Examples include creating topics, managing their configuration, or adding the ACLs a given application needs.

While many roads lead to Rome, one option is to use Git, Jenkins and a bit of code to solve this problem. GitOps to the rescue.

If you are not familiar with this approach, I recommend reading this very good explanation.

In a nutshell:

GitOps is a paradigm or a set of practices that empowers developers to perform tasks which typically fall under the purview of IT operations. GitOps requires us to describe and observe systems with declarative specifications that eventually form the basis of continuous everything.

Source: https://www.cloudbees.com/gitops/what-is-gitops

GitOps brings the following to the table:

Autonomy for teams: they can request and fulfil their own operational needs independently.

A declarative approach: users describe what they need rather than how it is implemented, which keeps implementation details abstracted away.

A centralised way to verify and control changes, providing an audit trail that fulfils change management requirements.

A centralised source of truth that represents the current state of the platform.

In this article we’re going to describe a possible solution for this situation, answering questions such as:

How can we benefit from GitOps with Apache Kafka and Confluent Platform?

How can we use it with Confluent Cloud?

How can we use it with an on-premises Kafka cluster?

Kafka Ops with the Kafka Topology Builder

What is the Kafka Topology Builder?

The Kafka Topology Builder is a tool in which users describe their needs in one or more cluster description files. The tool then ensures that their Apache Kafka cluster matches that description once the changes are applied.

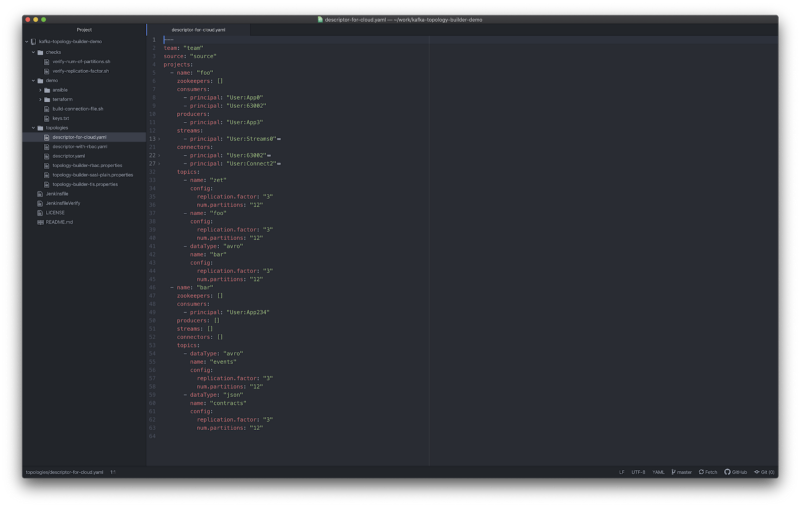

Based on a description file that can look like this:

A tool like this one can be a good starting point to build a GitOps strategy for Apache Kafka.

What can the Topology Builder do for you?

At the time of writing, the Topology Builder handles topic and ACL management. Future releases will add automated management of your schemas in Schema Registry.

Topic Management

One of the most common tasks when different teams share a Kafka cluster is managing their topics. As teams grow, it becomes hard to ensure that the right namespacing policy or a resilient configuration is in place without either causing failures or creating unnecessary work for operations.

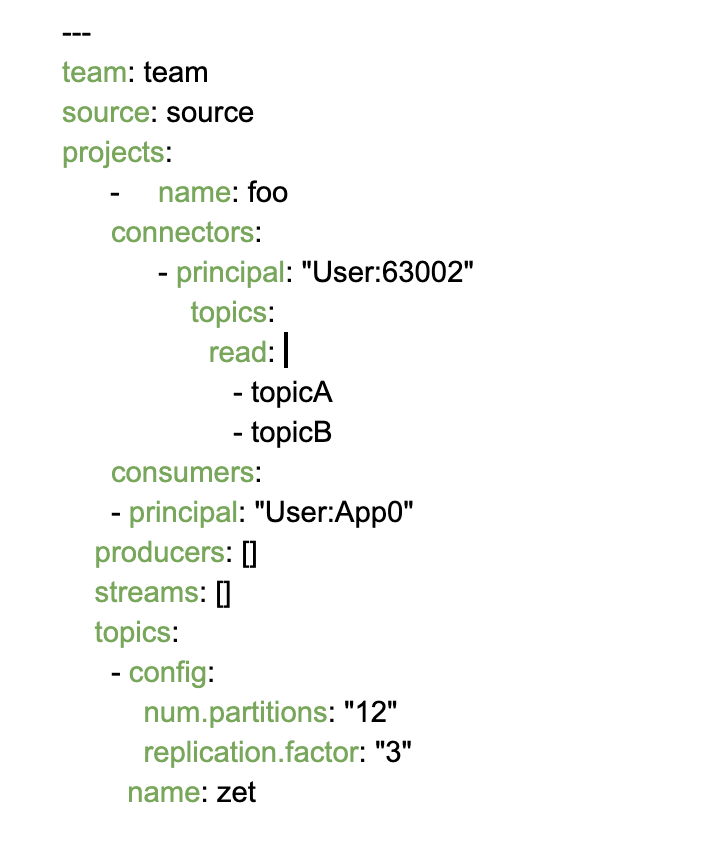

Based on the structure provided to the tool (see the example description file), the Topology Builder builds structured topic names where:

All first-level attributes before the project are added, in order of appearance.

Then comes the project name.

After that, the topic name.

Finally, the data type.

For example: team.source.bar.contracts.json

This strategy simplifies topic naming and reduces what each client team needs to know: they only add a section under each project they require. It also provides a natural structure for prefixed ACLs when required.
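To make the naming rule concrete, here is a minimal Python sketch of how such names could be derived. It is not the Topology Builder's implementation. The dictionary shape is an assumption modelled on the example name above: first-level keys such as `team` and `source`, then a `projects` list whose topics carry a `name` and an optional `dataType`. `topic_names` is a hypothetical helper.

```python
from typing import Any


def topic_names(topology: dict[str, Any]) -> list[str]:
    """Derive fully qualified topic names from a simplified topology dict.

    Assumed shape (hypothetical): first-level string attributes such as
    "team" and "source", then a "projects" list, where each project has a
    "name" and a list of "topics".
    """
    # First-level attributes that appear before "projects", in order of appearance
    prefix: list[str] = []
    for key, value in topology.items():
        if key == "projects":
            break
        prefix.append(str(value))

    names = []
    for project in topology.get("projects", []):
        for topic in project.get("topics", []):
            parts = prefix + [project["name"], topic["name"]]
            # In this sketch the data type suffix is optional
            if "dataType" in topic:
                parts.append(topic["dataType"])
            names.append(".".join(parts))
    return names


example = {
    "team": "team",
    "source": "source",
    "projects": [
        {"name": "bar", "topics": [{"name": "contracts", "dataType": "json"}]},
    ],
}
print(topic_names(example))  # ['team.source.bar.contracts.json']
```

Because Python dictionaries preserve insertion order, "order of appearance" falls out naturally from iterating over the top-level keys.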

Most importantly, the tool makes sure to:

Apply configuration changes when required, for example when retention.ms is changed.

Create topics, and delete them when required and configured to do so.

As a result, after each execution the cluster looks exactly as described in the source of truth.
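The reconciliation idea can be illustrated with a small Python sketch. It compares the desired topics with those found in the cluster and produces a plan. Everything here is a hypothetical simplification: `plan_topics`, the `Plan` class, and the representation of configs as plain string dictionaries. The real tool talks to the cluster through the AdminClient. The `allow_delete` flag loosely mirrors the CLI's `--allowDelete` option: without it, topics missing from the description are left untouched.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    update: dict[str, dict[str, str]] = field(default_factory=dict)
    delete: list[str] = field(default_factory=list)


def plan_topics(desired: dict[str, dict[str, str]],
                current: dict[str, dict[str, str]],
                allow_delete: bool = False) -> Plan:
    """Compare desired topic configs with the cluster's current ones."""
    plan = Plan()
    for name, config in desired.items():
        if name not in current:
            plan.create.append(name)
            continue
        # Keep only the entries whose desired value differs from the cluster's
        changed = {k: v for k, v in config.items() if current[name].get(k) != v}
        if changed:
            plan.update[name] = changed
    # Deletions are only planned when explicitly allowed
    if allow_delete:
        plan.delete = [name for name in current if name not in desired]
    return plan


desired = {
    "team.source.bar.contracts.json": {"retention.ms": "86400000"},
    "team.source.foo.accounts": {},
}
current = {
    "team.source.bar.contracts.json": {"retention.ms": "604800000"},
    "team.source.old.topic": {},
}
print(plan_topics(desired, current, allow_delete=True))
```

Separating "compute the plan" from "apply the plan" also makes it easy to print the plan in a pull request check before anything touches the cluster.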

NOTE: For this functionality the tool uses the Kafka AdminClient API.

ACL Management

Managing access control is another important, everyday task when running Kafka. The Kafka Topology Builder can also help you manage ACLs in a structured and automated way.

ACLs are handled much like topics, as seen in the previous descriptor file examples, or here for a full reference. As a user you describe what you need, and the tool does everything necessary to fulfil it.

The Topology Builder will enable you to manage access control for:

Vanilla Kafka consumers and producers, for applications that simply need to read from or write to topics.

All the rules Kafka Connect needs to work, including read (sink connectors) and write (source connectors) access to topics.

All the permissions a Kafka Streams application needs to function properly, including read access to its source topics and write access to the topics it writes to.

This covers the most common team requirements, from simple consumers and producers to more complex Kafka Connect and Kafka Streams applications. It makes the path to deployment more autonomous and easier for both developer teams and operations.

At the time of writing, the Topology Builder supports access control with both Kafka ACLs and Confluent RBAC.
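As an illustration of what "describe what you need" turns into for Kafka ACLs, the following Python sketch derives simplified bindings for a project's consumers and producers. It uses one PREFIXED binding per operation on the project's topic prefix. The binding set, the `AclBinding` tuple and `project_acls` are assumptions made for illustration, and the exact ACLs the Topology Builder creates may differ. Kafka Connect and Kafka Streams need further rules (internal topics, groups) that are not shown.

```python
from typing import NamedTuple


class AclBinding(NamedTuple):
    principal: str
    resource_type: str   # "TOPIC" or "GROUP"
    pattern_type: str    # "LITERAL" or "PREFIXED"
    resource_name: str
    operation: str       # e.g. "READ", "WRITE", "DESCRIBE"


def project_acls(prefix: str, consumers: list[str], producers: list[str]) -> list[AclBinding]:
    """Simplified ACLs for the consumers and producers of one project.

    `prefix` is the project's topic prefix, e.g. "team.source.bar.", so a
    single PREFIXED binding covers every topic the project owns.
    """
    acls = []
    for principal in consumers:
        acls.append(AclBinding(principal, "TOPIC", "PREFIXED", prefix, "DESCRIBE"))
        acls.append(AclBinding(principal, "TOPIC", "PREFIXED", prefix, "READ"))
        # Consumers also need READ on their consumer group; the literal "*"
        # wildcard is a simplification that matches any group name
        acls.append(AclBinding(principal, "GROUP", "LITERAL", "*", "READ"))
    for principal in producers:
        acls.append(AclBinding(principal, "TOPIC", "PREFIXED", prefix, "DESCRIBE"))
        acls.append(AclBinding(principal, "TOPIC", "PREFIXED", prefix, "WRITE"))
    return acls


for acl in project_acls("team.source.bar.", ["User:63002"], []):
    print(acl)
```

A production setup would usually restrict the group name instead of using the wildcard, which is one of the details a tool like this can standardise for every team.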

In future releases this tool will include support for KSQL, Schema Registry and others.

How can you use it?

Currently the Kafka Topology Builder is available as several artefacts:

As an RPM package, available from here.

As a docker image, available from here.

As source code, available from here.

For example, if you have docker available, you can run the latest topology builder release like this:

```
$> docker run purbon/kafka-topology-builder:latest kafka-topology-builder.sh -help
Parsing failed cause of Missing required options: topology, brokers, clientConfig
usage: cli
 --allowDelete          Permits delete operations for topics and configs.
 --brokers <arg>        The Apache Kafka server(s) to connect to.
 --clientConfig <arg>   The AdminClient configuration file.
 --help                 Prints usage information.
 --quite                Print minimum status update
 --topology <arg>       Topology config file.
```

An example with Confluent Cloud

Now that we have described what you can do with the Kafka Topology Builder, it is time for a small example of a full CI/CD pipeline. For this exercise we’re going to use Confluent Cloud, a fully managed Kafka service in the cloud.

First we need an idea of what we are going to build:

This picture shows the different steps, from the textual description in Git until the changes are applied to the cluster.

The necessary building blocks for this example can be found here.

How to build a GitOps solution

To build a solution, we need:

A code repository where the topology description and checks are stored. For this we’re going to use GitHub, but other repository systems such as Bitbucket work as well.

A CI/CD pipeline able to listen to changes happening in the code repository. For this we’re going to use Jenkins, a powerful open source CI/CD project.

A Kafka cluster. For this we’re going to use a cluster in Confluent Cloud.

A place where the Kafka Topology Builder can run. Here it runs within the CI/CD pipeline as a Docker container.

NOTE: We require users to propose changes through pull requests, which enables automated pre-verification of the changes, change management and access control.

The workflow is as follows:

A user (developer team) opens a pull request proposing changes to the cluster topics, configurations or access control.

A Jenkins job verifies that the changes are correct and reports a green or red check back to GitHub.

Once the automated verification passes, a human does an extra check and merges the pull request if accepted.

Once the changes land in the master branch, another Jenkins job is triggered automatically, pulls the changes and runs the Topology Builder with them.

When this workflow finishes, the changes are reflected in the Kafka cluster.

Let’s see how this works in our example.

First we need a repository where the Jenkins pipelines and topologies live. You can find it here.

NOTE: The PR verification step is built using the GitHub integrations plugin for Jenkins. It is not covered in this article to avoid making it longer than it already is, but the reader can find it here and here.

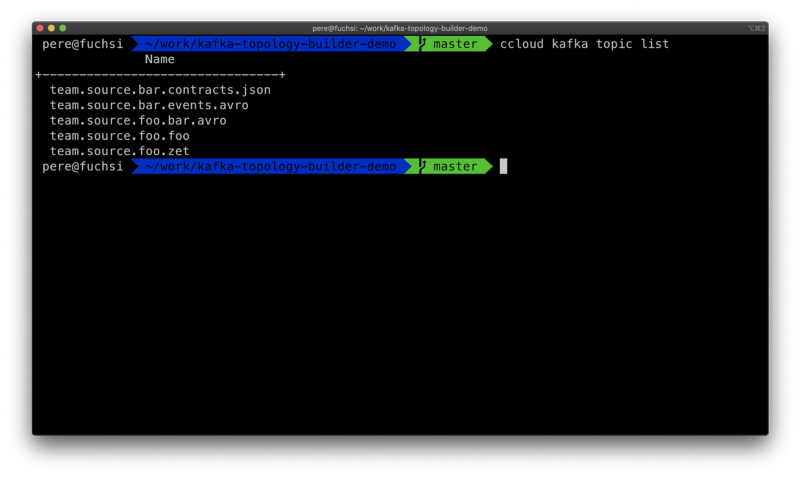

Let’s see the current status of the cluster:

List of topics in the cluster

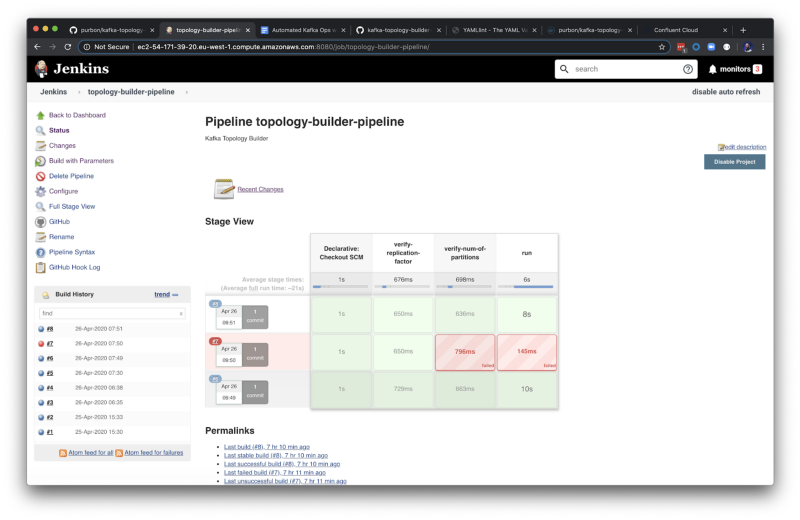

An available pipeline for Jenkins

The definition of the pipeline shown in the previous screenshot looks like this:

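```groovy
pipeline {
    // Run all stages inside the Kafka Topology Builder Docker image
    agent {
        docker { image 'purbon/kafka-topology-builder:latest' }
    }
    // TopologyFiles and Brokers are assumed to be job parameters. Because the
    // sh strings are single-quoted, Groovy leaves ${...} untouched and the
    // shell expands them from the environment at runtime.
    stages {
        stage('verify-replication-factor') {
            steps {
                // Check the replication factor of the described topics (expected: 3)
                sh 'checks/verify-replication-factor.sh ${TopologyFiles} 3'
            }
        }
        stage('verify-num-of-partitions') {
            steps {
                // Check the number of partitions of the described topics (expected: 12)
                sh 'checks/verify-num-of-partitions.sh ${TopologyFiles} 12'
            }
        }
        stage('run') {
            steps {
                // Expose the Confluent Cloud API key and secret only while
                // generating the AdminClient configuration file
                withCredentials([usernamePassword(credentialsId: 'confluent-cloud',
                                                  usernameVariable: 'CLUSTER_API_KEY',
                                                  passwordVariable: 'CLUSTER_API_SECRET')]) {
                    sh './demo/build-connection-file.sh > topology-builder.properties'
                }
                // Apply the described topology to the cluster
                sh 'kafka-topology-builder.sh --brokers ${Brokers} --clientConfig topology-builder.properties --topology ${TopologyFiles}'
            }
        }
    }
}
```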

Jenkins pipeline definition
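The `build-connection-file.sh` script from the demo repository is not shown here. As a hedged sketch, the Python function below renders the kind of AdminClient configuration a Confluent Cloud cluster typically expects: SASL_SSL with the PLAIN mechanism, using the API key and secret as username and password. The function name is hypothetical, and the real script may produce additional or different settings. The broker list is passed separately through `--brokers`, so it is omitted here.

```python
def build_connection_properties(api_key: str, api_secret: str) -> str:
    """Render an AdminClient properties file for a Confluent Cloud cluster."""
    # JAAS entry for the PLAIN mechanism, with the API key/secret as credentials
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{api_key}" password="{api_secret}";'
    )
    lines = [
        "security.protocol=SASL_SSL",
        "sasl.mechanism=PLAIN",
        f"sasl.jaas.config={jaas}",
        "ssl.endpoint.identification.algorithm=https",
    ]
    return "\n".join(lines) + "\n"
```

In the pipeline, the key and secret would come from the `CLUSTER_API_KEY` and `CLUSTER_API_SECRET` variables exported by `withCredentials`, and the output would be redirected to `topology-builder.properties`.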

This pipeline has two kinds of stages:

1.- Automated verifications, such as the required replication factor or number of partitions.

2.- The execution of the Kafka Topology Builder, here using its Docker image.

So once the required changes land in master, this pipeline runs and applies them.
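The verification stages call `checks/verify-replication-factor.sh` and `checks/verify-num-of-partitions.sh`, whose contents are not shown. A Python sketch of an equivalent check could look like the following. It assumes topics carry their settings under a `config` map with `replication.factor` and `num.partitions` keys. It also requires an exact match, whereas the real scripts might check a minimum instead. `check_topics` is a hypothetical name.

```python
from typing import Any


def check_topics(topology: dict[str, Any], key: str, expected: int) -> list[str]:
    """Return a description of every topic whose config `key` != `expected`."""
    failures = []
    for project in topology.get("projects", []):
        for topic in project.get("topics", []):
            value = topic.get("config", {}).get(key)
            # A missing setting counts as a failure, so cluster defaults never slip through
            if value is None or int(value) != expected:
                failures.append(f'{project["name"]}.{topic["name"]}: {key}={value}')
    return failures


topology = {
    "projects": [
        {"name": "foo", "topics": [
            {"name": "accounts", "config": {"replication.factor": "3", "num.partitions": "12"}},
            {"name": "audit", "config": {"replication.factor": "1", "num.partitions": "12"}},
        ]},
    ],
}
print(check_topics(topology, "replication.factor", 3))  # ['foo.audit: replication.factor=1']
print(check_topics(topology, "num.partitions", 12))     # []
```

In a pipeline stage, a non-empty result would be turned into a non-zero exit code so that Jenkins marks the build red.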

Let’s add a new topic and some access control permissions and see what it looks like.

These are the changes performed:

Add a new topic named accounts inside the project foo.

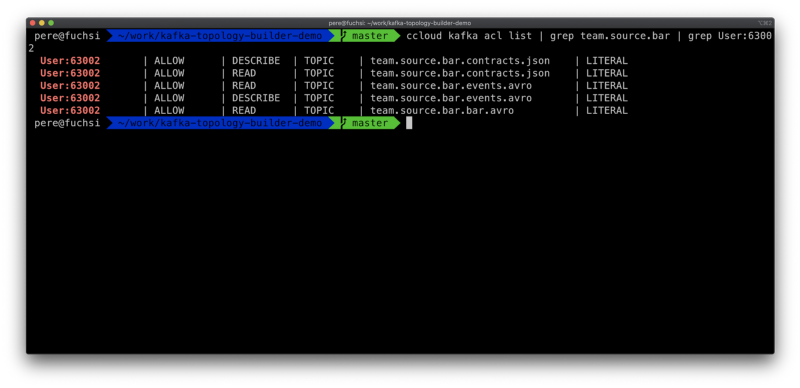

Add permissions for a new consumer (User:63002) in the project bar.

As soon as the changes land in the master branch, the pipeline picks them up, validates them and applies them to the cluster, which then looks like this:

New topic accounts created.

New ACLs being created.

This tool is a good fit for Confluent Cloud. The only thing users need to remember is how principals are used when creating ACLs. In Confluent Cloud, applications use service accounts, and each service account has a service-account-id.

This unique ID is the identifier used to create the ACLs that grant the application access. Remember, when using the Topology Builder with Confluent Cloud, the principal for your ACLs is each application's service-account-id.

With the Kafka Topology Builder you can build a fully functional GitOps solution to automate and manage your topics and access control, with more to come. This lets your team offer users an autonomous solution and traceable change management for Kafka.

References

A Kafka Topology Builder demo.